Ok, I admit the title might sound a little bold.

Recently as a part of a bigger project we've been working on a piece of software that would need to generate an extensive, complex and nested hash structure, then compare it with another, previously cached version of the same structure and decide whether it's the same or different. The rest of the system has been written in perl thus logically we've selected perl for the implementation. Our hash comparison tool would be executed every 30 seconds and it had to perform well on a rather busy system. We knew that a brute-force approach of comparing each key and value recursively would definitely cause performance issues. It was time to do some cheating!

Cheating in Perl

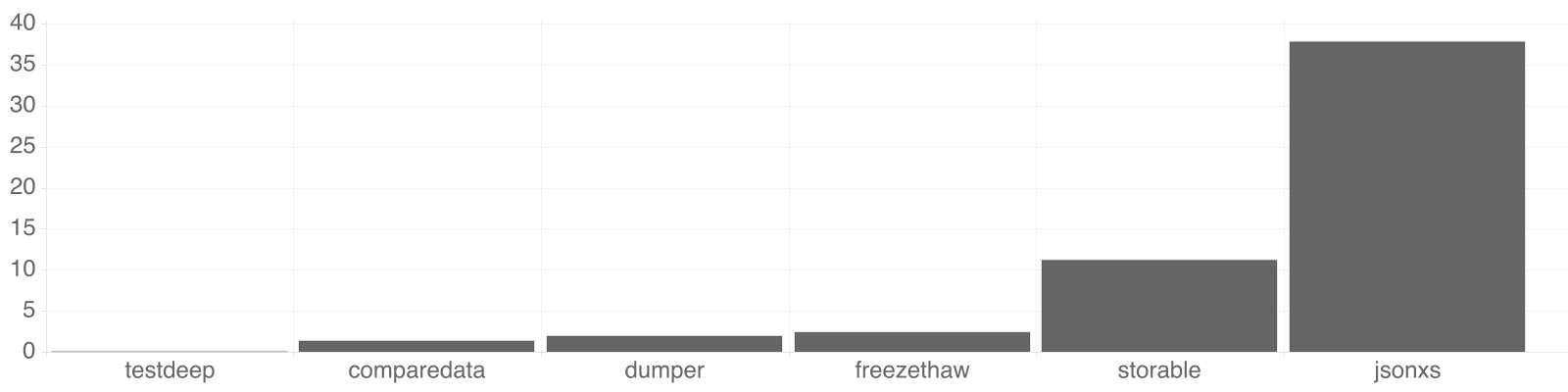

Fast forward to my findings, here is a table comparing various solutions for hash comparison:

Rate testdeep comparedata dumper freezthaw storable jsonxs testdeep 0.152/s -- -90% -93% -94% -99% -100% comparedata 1.45/s 855% -- -29% -42% -87% -96% dumper 2.04/s 1245% 41% -- -18% -82% -95% freezthaw 2.50/s 1547% 73% 22% -- -78% -93% storable 11.3/s 7329% 678% 452% 351% -- -70% jsonxs 37.9/s 24861% 2514% 1755% 1415% 236% --

Benchmark performed by running 100 iterations of each comparison subroutine using two identical hashes occupying approximately 4MB of memory each. Tested on a server with 8GB of RAM and powered by a 64bit, 2Ghz AMD Opteron 2212 CPU

- Solution marked as testdeep above is using the eq_deeply method from the Test::Deep package.

- Solution marked as comparedata above is using the Compare method from the Data::Compare package.

- Solution marked as freezethaw above is using the freeze method from the FreezeThaw package on both hashes then compares strings returned by this method.

- Solution marked as storable above is using the freeze method from the Storable package on both hashes, then compares strings returned by this method. In order to achieve comparable results the $Storable::canonical property is set to be true.

- Solution marked as jsonxs is using the encode method from the JSON::XS package on both hashes, then compares strings returned by this method. Before the encode method can be applied, module needs to be configured in order to make sure that it always produces identical output. I've have used the following method in my benchmark:

my $json = new JSON::XS;

$json->indent(0);

$json->space_after(0);

$json->space_before(0);

$json->canonical(1);

return $json->encode($hash) eq $json->encode($hash2);So why did JSON::XS perform so much better than any other comparison/serialization method? First of all JSON format is quite good for serialization/deserialization performance (not so much for the size). But why it's really so much faster is the fact that all the actual heavy lifting is done in a nicely-optimized code written in C. Perl is just a wrapper using an XS Interface.

More cheats?

There are many more Perl XS libraries available on CPAN that can give a decent performance boost to your applications. Aside from JSON::XS we've been using two other XS packages: CSV_XS for parsing/generation of comma-separated values and YAML::XS for YAMLS serialization. Furthermore if you can think of any piece of functionality that would benefit from a C-based implementation, you can create your own Perl XS implementation thought it is not very straight forward and there is certain amount of hacking required.